“Magic doesn’t fool you because you’re stupid,” Penn Jillette said on the Jonathan Ross show in 2019.

Magic fools you because it’s stupid. … The human brain wants to have beautiful, wonderful experiences. So, if you want to fool somebody, you come up with something really, really stupid.

Jillette then brought comedian Rebel Wilson onto the stage and through misdirection and sleight of hand, managed to perform an illusion that was transparent to everyone in the room and even to everyone at home, but not to Rebel Wilson. As Jillette put it, Wilson got to see the magic. We got to see the stupid.

Jillette wasn’t offering commentary on the state of large language models (or large reasoning models) in 2025, but he might as well have been.

Two recently released papers argue that what industry has been calling “reasoning” isn’t really reasoning at all.

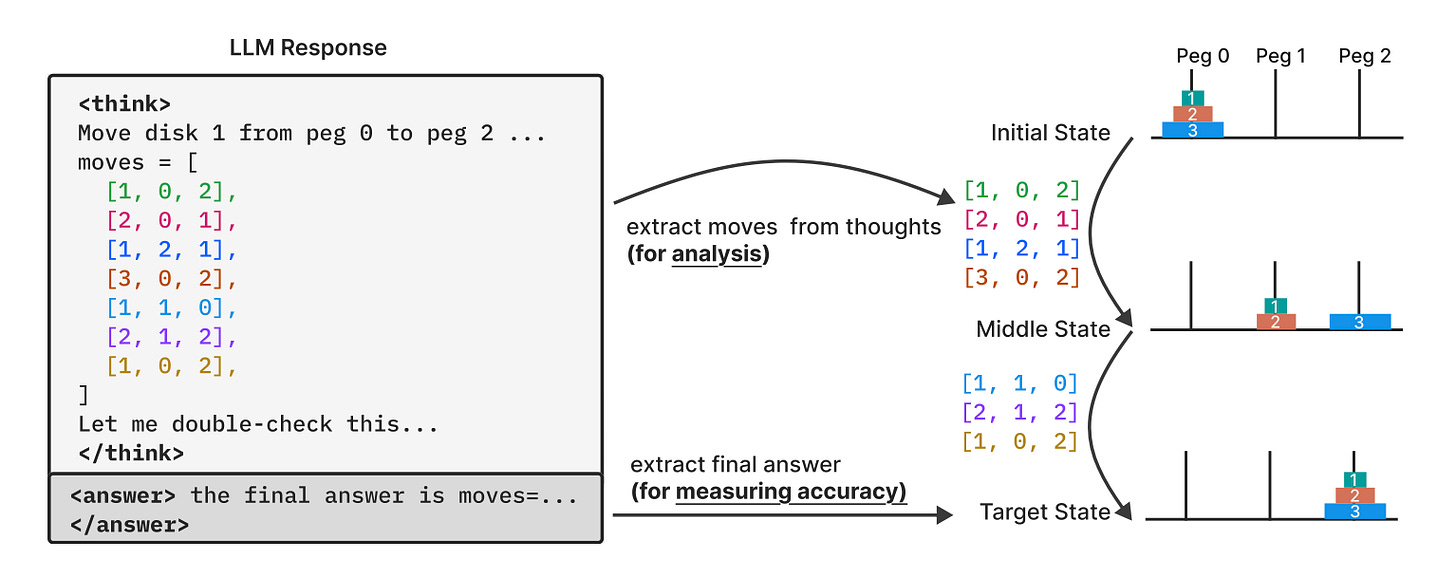

In Apple’s recent paper, “The Illusion of Thinking,” researchers compared the performance of large language models against the performance of language reasoning models. So these pairings pit, for instance, Anthropic’s Claude 3.7 against Claude 3.7 with “extended thinking mode;” and it compares Gemini against Gemini Thinking and DeepSeek-V3 against DeepSeek-R1, etc.

The tasks under consideration were puzzles of varying complexity. Here’s just one example. Do you remember the baby or toddler toy that has a single peg with several disks of different colors and sizes? When the disks stacked in order with the largest on the bottom the stack is sort of shaped like a Christmas tree. There’s a well-known logic puzzle call the Tower of Hanoi that presents participants with a similar peg and stacked disks except the disks are stacked randomly. Then there are two other empty pegs available to the participant. She can move disks from the original stack to one of the two other pegs. Ultimately, the participant is supposed to produce a stack in order from largest at the bottom to smallest on top. This is one of the tasks the Apple researchers gave the models. By adding or removing disks, the researchers can throttle the complexity of the task.

As is often the case in papers with flashy titles, the substance of the paper’s conclusions don’t really live up to the title’s hype. The researchers didn’t really show that reasoning in large reasoning models is illusory.

Here’s what they did find. In puzzles of varying complexity, model performance can be categorized in three different regimes:

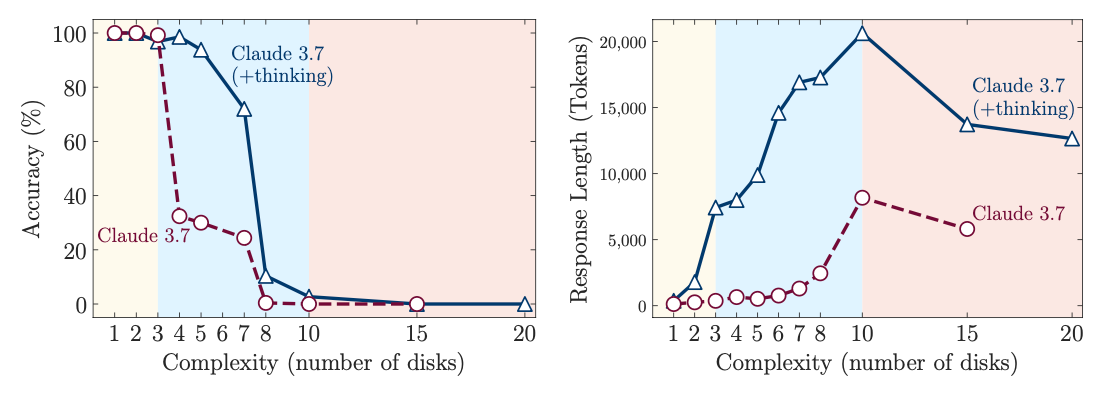

In simple tasks, the non-reasoning models out-performed the reasoning models in both accuracy and efficiency. The researchers found that in simple tasks, even when the reasoning models did land on the correct answer, they continued to try alternatives. This inefficiency, combined with the fact that large reasoning models already employ computationally expensive chain of thought processes, means that the computing cost for a given simple task is higher for large reasoning models than it is for the large language model without “reasoning” capabilities. So, in this regime of simple tasks, the finding is a kind of double-whammy. If you use a large reasoning model on a simple reasoning task, not only are you more likely to get the answer wrong, but it’s also going to cost you more to do it.

In moderately complex tasks, the reasoning models did tend to arrive at the correct answer more often than the non-reasoning models, but only after considering lots of false alternatives. Here, there is a tradeoff between accuracy and efficiency. In other words, you can use a large reasoning model to arrive at the right answer to a moderately complex reasoning task, but it’ll cost you.

Finally, for both large language models and large reasoning models, the researchers found that beyond a certain complexity threshold, model performance collapses. For example, when the researchers used Claude 3.7 to solve the disk task, the large language model by itself was able to conduct the task with only three disks, but it was unable to do it with any more than three. The large reasoning model version could do up to six, but beyond that, its performance collapsed, too.

One of the most important findings in the paper is that even when the researchers gave the model the answer, that didn’t improve performance. For instance, there is a known algorithmic solution to the Tower of Hanoi puzzle. It’s a series of sequential steps that ultimately yields the right solution for the task. When researchers provided that algorithmic solution in the prompt, the large reasoning models did not perform any better than when they weren’t given the algorithmic solution.

So, what can we learn from this paper? The answer is summarized nicely in a second paper published at about the same time. As Jintian Shao and Yiming Cheng put it in their paper, “[Chain of Thought] is Not True Reasoning,”

By forcing the generation of intermediate steps, Chain-of-Thought (CoT) leverages the model’s immense capacity for sequence prediction and pattern matching, effectively constraining its output to sequences that resemble coherent thought processes.

In other words, large language models—even their close cousins, large reasoning models—are not actually doing what you and I do when we reason. They are only imitating the words that we use. And yet, by imitating the words that we use, they are able to mimic human reasoning.

Here are the authors again

The journey towards truly intelligent systems is long, and distinguishing clever mimicry from genuine understanding is a critical milestone.

This is yet another example of why I’m such a radical centrist in the AI debates. It is not the case that there’s nothing to see here. But it is also not the case that AI is on the verge of human-level performance.

The capability made possibly by generative AI, including so-called “reasoning” models is real and its important. But it is also limited. I know there are lots of voices out there who think that, in very short order, we’ll be able to use generative AI to solve the world’s hard problems. What the Apple researchers showed empirically, and what Shao and Cheng argued theoretically, is that the performance of foundation models—even large reasoning models—depends upon access to the relevant problems in the training data.

How are we able to judge these models’ performance on puzzles like the Tower of Hanoi and River Crossing? Because we humans have already solved those puzzles. We are just not anywhere close to being able to feed generative AI the world’s hardest problems—solving world hunger, managing nuclear deterrence, or building a plan for the climate—and then just hitting the “I believe” button when we get the outputs.

It’s like Penn says (sort of), AI doesn’t fool us because we’re stupid. Our brains just want to have these beautiful, wonderful experiences. When we see AI do something that looks so human, like reasoning, we want to believe its reasoning. But these papers (and many other ls like them) are like Penn Jillette showing us how he does the trick. Next token prediction is still just next token prediction even when it’s really good.

Do pay attention to the man behind the curtain. AI isn’t magic.

Credit where it’s due

Views Expressed are those of the author and do not necessarily reflect those of the US Air Force, the Department of Defense, or any part of the US Government.