I’m just over a month into this Substack adventure and I have a favor to ask. If you like what you read, would you consider sharing it with someone in your network who might like it, too?

In C. S. Lewis’s Till We Have Faces (one of my favorite books of all time), the main character, Orual, describes the lengths to which her tutor went to obtain books from across the known world, ultimately building the kingdom’s “noble library” of eighteen works. At the end of the list of books, which includes Socrates and Heraclitus, she mentions “a very long, hard book (without metre) which begins All men by nature desire knowledge.”

This is Lewis’s subtle nod to Aristotle’s Metaphysics—it is, indeed, a long, hard book. But don’t worry! Today, we’re not going to make it past the first sentence.

If you take the LinkedIn commentariat seriously on the state of AI, you’ve probably noticed the same phenomenon I have. There’s a debate over whether reasoning models really understand the subject matter they’re given. Unfortunately, this debate often devolves into a “yes they do” / “no they don’t” structure.

Here’s none other than Andrew Ng—a well-respected pioneer in the field of deep learning—weighing in.

“Do Large Language Models really ‘understand’ the world,” Ng tweeted. “Evidence … shows LLMs build models of how the world works, which makes me comfortable saying they do understand.”

For a deeper look at the paper to which Ng was responding and a more thorough account of world models, I encourage you to read Melanie Mitchell’s two posts on the topic. Mitchell is another well-respected technical expert in the field.

Here, I don’t want to focus on the technical means by which models learn, but instead on what we mean by “understand.”

This question—whether AI can understand—is difficult to answer in part because it’s so hard for us to know what we mean when we talk about human understanding. It’s hard for us to understand understanding.

The word Aristotle uses for “to know” in that opening line is eidenai (εἰδέναι). I took an undergraduate class on the Metaphysics at Boston University. We used Hippocrates G. Apostle’s translation (such a cool name). Apostle chose to translate this word as “understanding.” So, Aristotle says, “all men by nature desire understanding.”

As we sat around a conference table on the third floor of the College of Arts and Sciences building, professor David Roochnik led a seminar discussion for two hours on this one sentence.

A student asked, “What does he mean by ‘understanding’?”

I’ll admit that I had never thought about this question before. What do we mean by understanding? As Roochnik pointed out, the word itself is metaphorical. When we understand, are we standing under something? Is something standing under us?

Aristotle’s Greek word, eidenai, meant most directly “to see” with one’s physical eyes. But in Greek, as in English, that verb “to see” is used to refer to “seeing” in the mind’s eye, too. We can find the same metaphorical language in English. For instance, when I say, “ah, I see what you mean,” I don’t actually see with my eyes. I conceive, or perceive, or know with my mind.

Something similar is happening with our English word, “understand.”

I’ll spare you the lengthy etymology, but suffice it to say that the “under” in “understanding” doesn’t come from our ordinary modern use of “under” to mean “beneath.” Instead, it’s closer to the Latin “inter,” meaning “between” or “among” (and a cousin of the Greek “entera” and the Sanskrit “antar,” which grow from the same root sense of being “between” or “within”).

To understand a thing is to stand between or among it. I understand what swimming is because I’ve been in a pool and in the ocean, where I have felt the water press against my skin. I understand G-forces because I have been in a jet and felt them press on my body. I understand what “winter wonderland” means because I’ve been in the midst of a winter wonderland, perhaps most memorably while walking in the middle of Commonwealth Avenue in the middle of the night in the middle of college in the middle of a nor’easter. I understand it because I was in the middle of it.

Our word “understanding,” which shares its roots with words in Latin, Greek, and Sanskrit, names an embodied kind of knowledge. It is cognitive, maybe, but cognitive in that special human sort of way. It is the kind of cognition that requires not just the ghost in the machine but the machine itself.

Philosophers have wrestled with the so-called “mind/body” problem at least since Descartes. The 17th-century French philosopher argued that we should consider mind and body as two different entities. The non-physical mind controls the physical body like a ghost controlling a machine. Though that is a very crude summary, the view it describes has become the dominant modern one. The mind and the body are different things.

But can this modern dualism with which we have become so comfortable really capture the kind of understanding the etymology of “understanding” suggests? In other words, is my mind—independent of sense experience—capable of understanding? Or is sense experience a necessary condition for understanding—for knowing by being between or among or in the midst of?

If understanding is knowledge from being between and among, is understanding possible without a physical body that can literally be between and among the things we seek to understand?

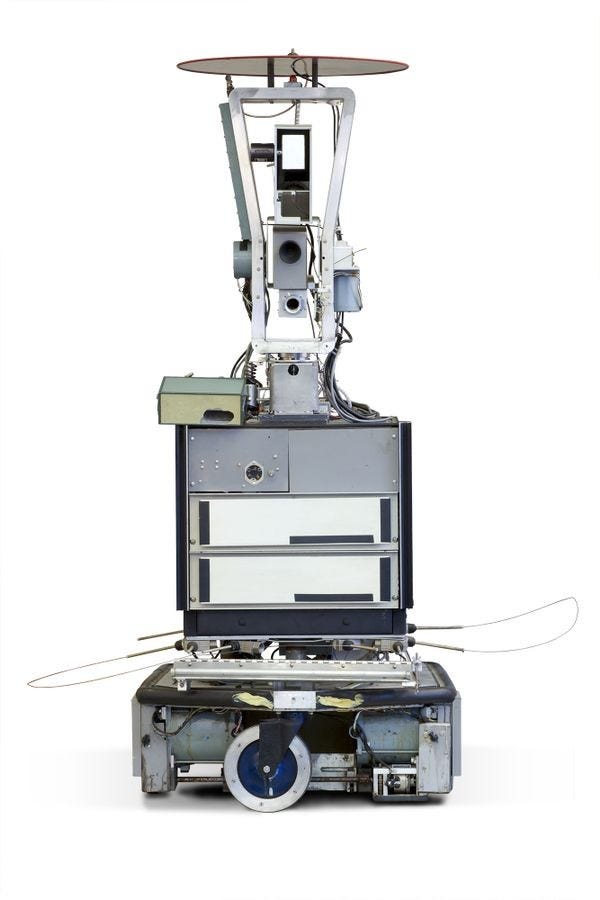

These questions are especially interesting because the field of artificial intelligence was once heavily focused on building intelligent machines that can interact with the physical world. The early AI researchers wanted to build robots that would be like us in having something like a mind (software control mechanisms) and something like a body (hardware capable of perceiving the world around them).

Shakey the robot is a great example from the 1960s. The ground-mobile robot looks like the love child of a passionate affair between an air fryer and a patio heater.

For Shakey to move about the room autonomously, it needed not only the processing power to make “decisions” but also the cameras and laser scanners to sense objects in the room. In 21st-century terms, it had to “sense” and then “make sense” of the world around it. (There’s a great overview of Shakey and its sensors in the first two minutes of this video, and you’ll notice in the voiceover that participants in that 1973 debate are asking some of the very same questions we’re still wrestling with today.)

As the AI field grew to maturity, researchers learned just how difficult the sensing and sense-making challenges are. That work didn’t stop, of course. We can see the practical benefits of that research every time we pass a Tesla in autonomous mode on the highway or even when my 2017 Subaru automatically senses that I’ve drifted too close to the lane line and nudges the steering wheel back toward the center. But the AI research community did split into different tracks.

As the robot research progressed in one direction, pure software solutions developed in another. Breakthroughs in software in this century gave us first deep learning for computer vision object classification, and then rapid advances in natural language processing, and eventually the large language models that dominate headlines today.

This historical phenomenon, though, has brought us to a place where we’re now asking whether pure software solutions—with no sense perception and no sense-making ability—can understand the world.

This is the difficulty that lies beneath (that stands under, if you like) the discourse on large language models and understanding. Can they get answers right? Of course. Can they complete tasks that have traditionally required human intelligence? Absolutely. Can they understand? Well, that depends on what we mean by understanding. But they certainly cannot stand between, among, within, or amidst our world.

This is one of the under-discussed limitations of current trends in AI. The big bet AI companies have made on scale suggests that if we can only get enough training data, and enough computing power, and complex enough model architectures, then we will be able to build machines that can understand our world.

But there is an inherent limit in this approach in that, unlike us humans, these machines aren’t trained on our world, not really. They’re trained on our data, which is only an imperfect facsimile of our world. Can a large language model ever stand between and among the things in our world—can it understand our world? Or will it only ever stand between and among our data?

I don’t know why—or at least, I don’t know all the reasons why—the big AI labs have failed to deliver the general artificial intelligence they continue to promise is right around the corner. But one reason might be that human-level intelligence just is embodied intelligence. You and I can understand because we can stand between and among the things in our world. No matter how large the training datasets and how expansive (and expensive) the computing power, large language models will never be able to do that.

Large language models can learn to use our languages, but I’m not sure they can understand our world.